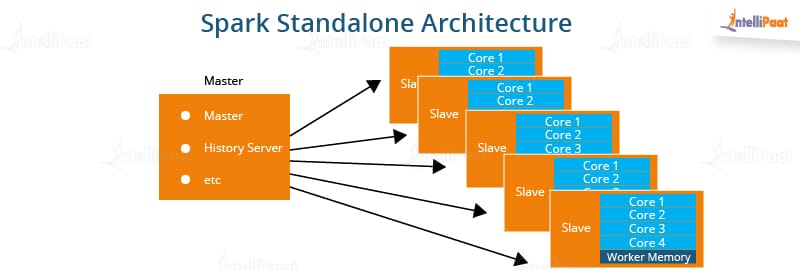

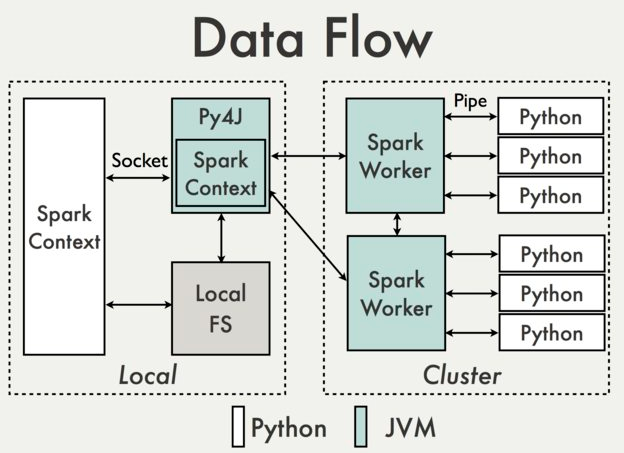

This topic will help you install Apache-Spark on your AWS EC2 cluster. We'll go through a standard configuration that allows the elected Master to spread its jobs across the Worker nodes. The "election" of the primary Master is handled by Zookeeper.

The goal of this final tutorial is to configure Apache-Spark on your instances and make them communicate with your Apache-Cassandra cluster with full resilience. This tutorial is divided into 5 sections. Among them are launching your Master and your Slave nodes, and adding the dependencies to connect Spark and Cassandra.

Spark can be configured with multiple cluster managers, such as YARN or Mesos, and it can also run in its own standalone mode. For this tutorial, I chose to deploy Spark in Standalone Mode. It is the simplest way to deploy Spark on a private cluster. It is also convenient for developing Spark applications, because the driver and the workers can even run on the same machine.

Java must be pre-installed on every machine that will run Spark jobs.

Connect via SSH to every node except the node named Zookeeper, and make sure each SSH connection is established. If you don't remember how to do that, you can check the last section of …

For the sake of stability, I chose to install version 2.3.2.
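The Zookeeper-based election can be made concrete with a minimal Python sketch that renders the settings involved. It relies on Spark's documented standalone recovery properties (`spark.deploy.recoveryMode`, `spark.deploy.zookeeper.url`, `spark.deploy.zookeeper.dir`), which are passed through `SPARK_DAEMON_JAVA_OPTS` in `conf/spark-env.sh`. The hostnames, the `/spark` directory and the helper function names are hypothetical. Replace them with your own.

```python
"""Sketch: render spark-env.sh settings for Zookeeper-based Master recovery.

Assumptions (hypothetical, adapt to your cluster): the Master hostnames,
the Zookeeper hostname and port, and the znode directory used below.
"""

SPARK_MASTER_PORT = 7077  # default port of a standalone Spark Master


def zookeeper_daemon_opts(zk_hosts, zk_port=2181, zk_dir="/spark"):
    """Build the SPARK_DAEMON_JAVA_OPTS line enabling Zookeeper recovery mode."""
    zk_url = ",".join(f"{host}:{zk_port}" for host in zk_hosts)
    opts = [
        "-Dspark.deploy.recoveryMode=ZOOKEEPER",
        f"-Dspark.deploy.zookeeper.url={zk_url}",
        f"-Dspark.deploy.zookeeper.dir={zk_dir}",
    ]
    return 'SPARK_DAEMON_JAVA_OPTS="' + " ".join(opts) + '"'


def master_url(master_hosts, port=SPARK_MASTER_PORT):
    """Build the multi-Master URL that Workers and applications connect to."""
    return "spark://" + ",".join(f"{host}:{port}" for host in master_hosts)


if __name__ == "__main__":
    # Hypothetical hostnames: one Zookeeper node and two candidate Masters.
    print(zookeeper_daemon_opts(["zookeeper"]))
    print(master_url(["master1", "master2"]))
```

Under these assumptions, you would place the first printed line in `conf/spark-env.sh` on each Master candidate. You would pass the multi-Master URL to `./sbin/start-slave.sh` on the Workers and to `--master` in your applications. That way, they can fail over to whichever Master Zookeeper elects.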

Add dependencies to connect Spark and Cassandra
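The Python helper below is a hedged sketch of what adding these dependencies can look like. It assembles a `spark-submit` command that pulls the DataStax Spark Cassandra Connector through `--packages` and points it at the Cassandra nodes with `spark.cassandra.connection.host`. The Scala 2.11 suffix matches the prebuilt Spark 2.3.2 binaries. The connector version, hostnames, application file and helper names are assumptions, so check them against your setup.

```python
"""Sketch: assemble spark-submit arguments that pull in the
DataStax Spark Cassandra Connector.

Assumptions (hypothetical, check against your versions): Spark 2.3.2
built with Scala 2.11, connector version 2.3.2, the Master and Cassandra
hostnames, and the application file name.
"""

SCALA_BINARY_VERSION = "2.11"  # prebuilt Spark 2.3.x binaries use Scala 2.11
CONNECTOR_VERSION = "2.3.2"    # assumed to match the Spark 2.3 line


def connector_coordinate(scala=SCALA_BINARY_VERSION, version=CONNECTOR_VERSION):
    """Return the Maven coordinate expected by spark-submit --packages."""
    return f"com.datastax.spark:spark-cassandra-connector_{scala}:{version}"


def spark_submit_args(master_url, cassandra_hosts, app):
    """Build the argument list for spark-submit (it is not executed here)."""
    return [
        "spark-submit",
        "--master", master_url,
        "--packages", connector_coordinate(),
        # Contact points the connector uses to discover the Cassandra ring.
        "--conf", "spark.cassandra.connection.host=" + ",".join(cassandra_hosts),
        app,
    ]


if __name__ == "__main__":
    args = spark_submit_args(
        "spark://master1:7077,master2:7077",  # hypothetical Masters
        ["cassandra1", "cassandra2"],         # hypothetical Cassandra nodes
        "my_job.py",                          # hypothetical application
    )
    print(" ".join(args))
```

Returning a list instead of a single string keeps each argument intact. You could hand it to `subprocess.run` on a node where `spark-submit` is on the `PATH`.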
